Exploring the Ethics Behind Self-Driving Cars

How do you code ethics into autonomous automobiles? And who is responsible when things go awry?

August 13, 2015

Illustration by Abigail Goh

Imagine a runaway trolley barreling down on five people standing on the tracks up ahead. You can pull a lever to divert the trolley onto a different set of tracks where only one person is standing. Is the moral choice to do nothing and let the five people die? Or should you hit the switch and therefore actively participate in a different person’s death?

In the real world, the “trolley problem,” first posed by philosopher Philippa Foot in 1967, is an abstraction most people will never actually have to face. And yet, as driverless cars roll into our lives, policymakers and auto manufacturers are edging into similar ethical dilemmas.

For instance, how do you program a code of ethics into an automobile that performs split-second calculations that could harm one human over another? Who is legally responsible for the inevitable driverless-car accidents — car owners, carmakers, or programmers? Under what circumstances is a self-driving car allowed to break the law? What regulatory framework needs to be applied to what could be the first broad-scale social interaction between humans and intelligent machines?

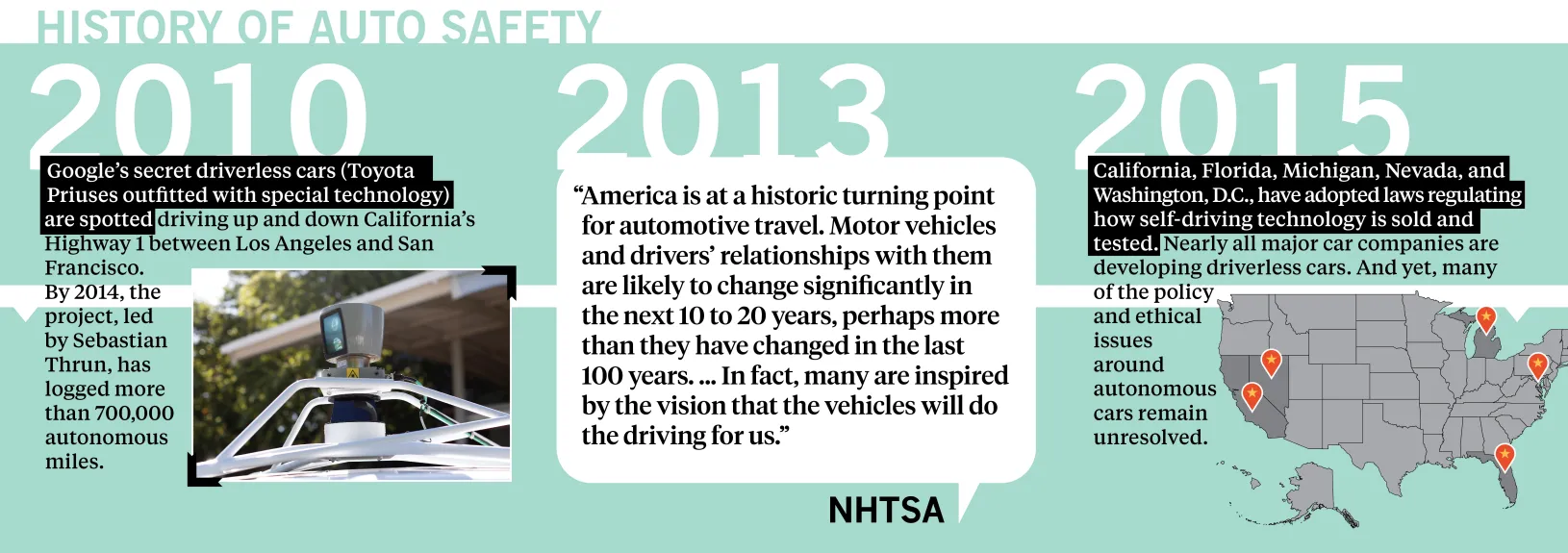

Ken Shotts and Neil Malhotra, professors of political economy at Stanford GSB, along with Sheila Melvin, mull the philosophical and psychological issues at play in a new case study titled “‘The Nut Behind the Wheel’ to ‘Moral Machines’: A Brief History of Auto Safety.” Shotts discusses some of the issues here:

What are the ethical issues we need to be thinking about in light of driverless cars?

This is a great example of the “trolley problem.” You have a situation where the car might have to make a decision to sacrifice the driver to save some other people, or sacrifice one pedestrian to save some other pedestrians. And there are more subtle versions of it. Say there are two motorcyclists: one is wearing a helmet and the other isn’t. If I want to minimize deaths, I should hit the one wearing the helmet, but that just doesn’t feel right.

These are all hypothetical situations that you have to code into what the car is going to do. You have to cover all these situations, and so you are making the ethical choice up front.

It’s an interesting philosophical question to think about. It may turn out that we’ll be fairly consequentialist about these things. If we can save five lives by taking one, we generally think that’s something that should be done in the abstract. But it is something that is hard for automakers to talk about because they have to use very precise language for liability reasons when they talk about lives saved or deaths.
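To make “making the ethical choice up front” concrete, here is a minimal sketch of what a purely consequentialist rule might look like in code. It is an illustration only, not how any automaker programs its vehicles: the `Maneuver` class, the `consequentialist_choice` function, and the fatality probabilities are all hypothetical, chosen to mirror the two-motorcyclist example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    name: str
    people_at_risk: int    # how many people this maneuver endangers
    fatality_prob: float   # made-up chance that each of them dies

    @property
    def expected_deaths(self) -> float:
        return self.people_at_risk * self.fatality_prob


def consequentialist_choice(options):
    """Return the maneuver with the fewest expected deaths."""
    return min(options, key=lambda m: m.expected_deaths)


# Hypothetical numbers: a helmet is assumed to halve the risk of death.
options = [
    Maneuver("hit helmeted rider", people_at_risk=1, fatality_prob=0.4),
    Maneuver("hit unhelmeted rider", people_at_risk=1, fatality_prob=0.8),
]

choice = consequentialist_choice(options)
print(choice.name)  # hit helmeted rider
```

The rule picks the helmeted rider because that minimizes expected deaths, which is exactly the outcome that “just doesn’t feel right.” The ethical judgment sits in the choice of what to minimize, and it is fixed long before the car is on the road.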

What are the implications of having to make those ethical choices in advance?

Right now, we make those instinctive decisions as humans based on our psychology. And we make those decisions erroneously some of the time. We make mistakes, we mishandle the wheel. But we make gut decisions that might be less selfish than what we would do if we were programming our own car. One of the questions that comes up in class discussions is whether, as a driver, you should be able to program a degree of selfishness, making the car save the driver and passengers rather than people outside the car. Frankly, my answer would be very different if I were programming it for driving alone versus having my 7-year-old daughter in the car. If I have her in the car, I would be very, very selfish in my programming.
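The “degree of selfishness” question can be pictured as a single dial. The sketch below is hypothetical throughout: `occupant_weight`, the two maneuvers, and the risk figures are assumptions made for illustration, not a description of any real vehicle software. A weight of 1.0 treats everyone equally, and a higher weight counts harm to the people inside the car more heavily.

```python
def weighted_harm(occupant_risk, outsider_risk, occupant_weight=1.0):
    """Total expected harm, with occupants counted occupant_weight times."""
    return occupant_weight * occupant_risk + outsider_risk


def choose(options, occupant_weight=1.0):
    """Pick the maneuver name with the lowest weighted harm."""
    return min(
        options,
        key=lambda name: weighted_harm(*options[name], occupant_weight),
    )


# Made-up expected deaths as (inside the car, outside the car).
options = {
    "swerve into barrier": (0.5, 0.0),
    "stay in lane": (0.0, 0.9),
}

print(choose(options, occupant_weight=1.0))  # swerve into barrier
print(choose(options, occupant_weight=3.0))  # stay in lane
```

With the neutral setting the car accepts risk to its occupant to spare the person outside. Turn the dial up, as a parent with a child in the back seat might, and the same car makes the opposite choice.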

Who needs to be taking the lead on parsing these ethical questions — policymakers, the automotive industry, philosophers?

The reality is that a lot of it will be what the industry chooses to do. But then policymakers are going to have to step in at some point. And at some point, there are going to be liability questions.

There are also questions about breaking the law. The folks at the Center for Automotive Research at Stanford have pointed out that there are times when normal drivers do all sorts of illegal things that make us safer. You’re merging onto the highway and you go the speed of traffic, which is faster than the speed limit. Someone goes into your lane and you briefly swerve into an oncoming lane. In an autonomous vehicle, is the “driver” legally culpable for those things? Is the automaker legally culpable for it? How do you handle all of that? That’s going to need to be worked out. And I don’t know how it is going to be worked out, frankly. Just that it needs to be.

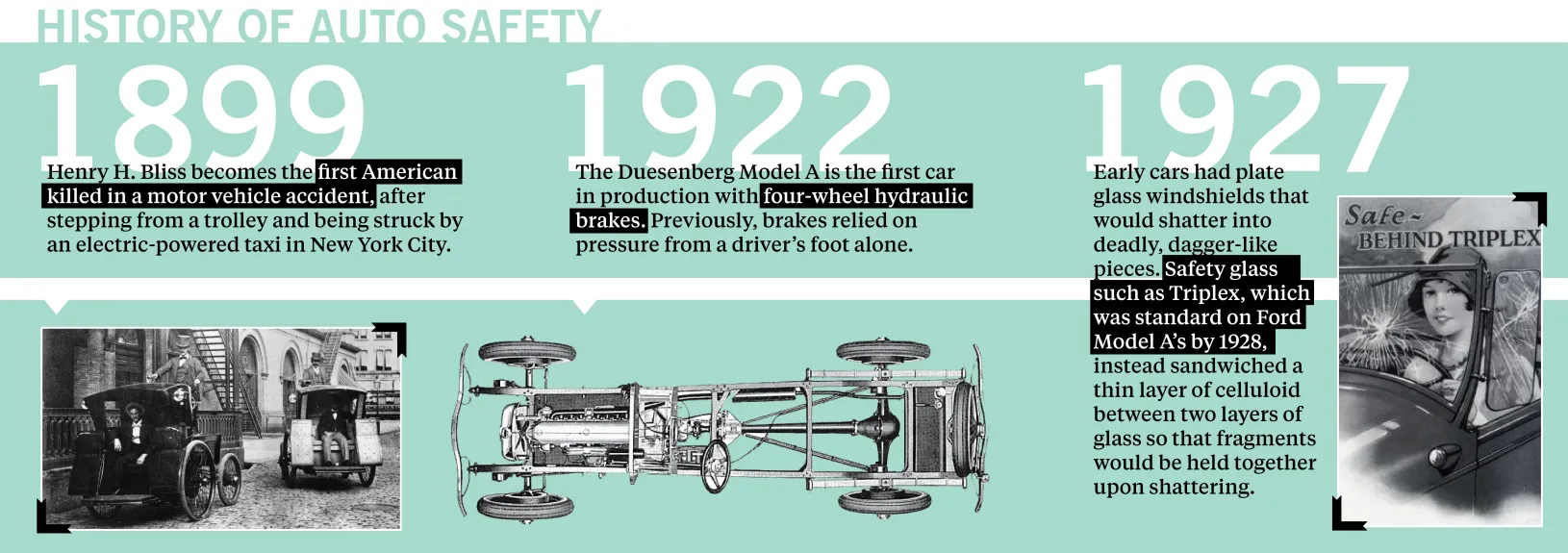

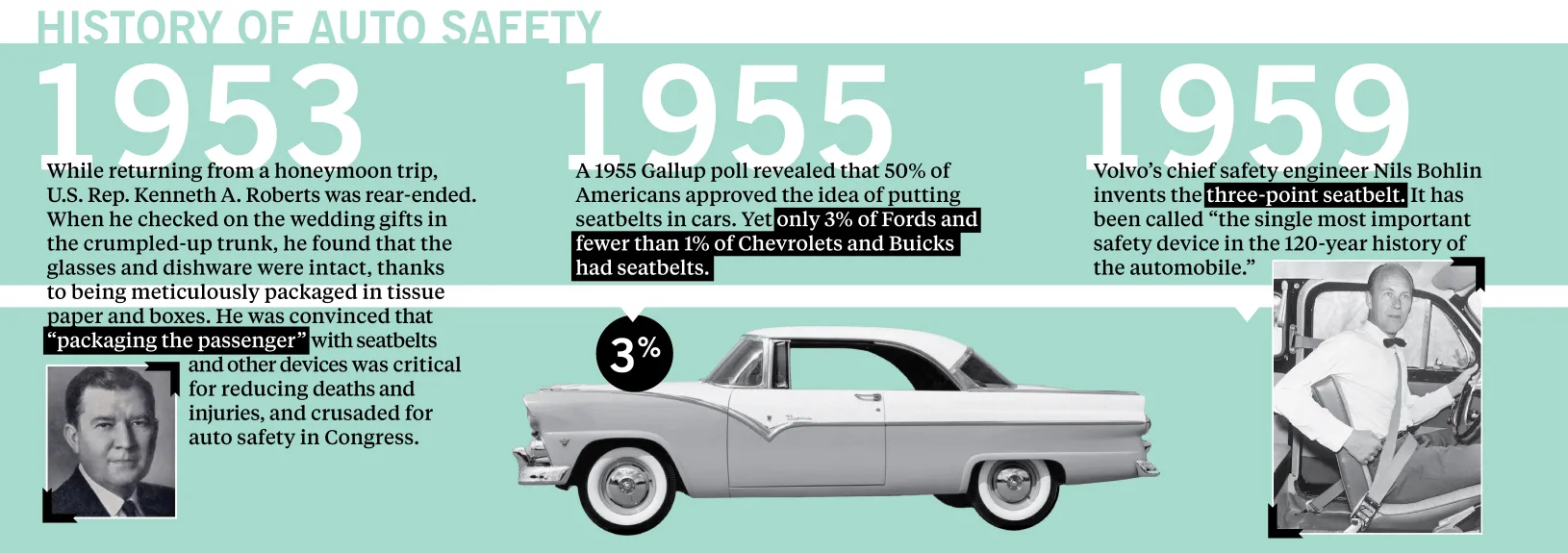

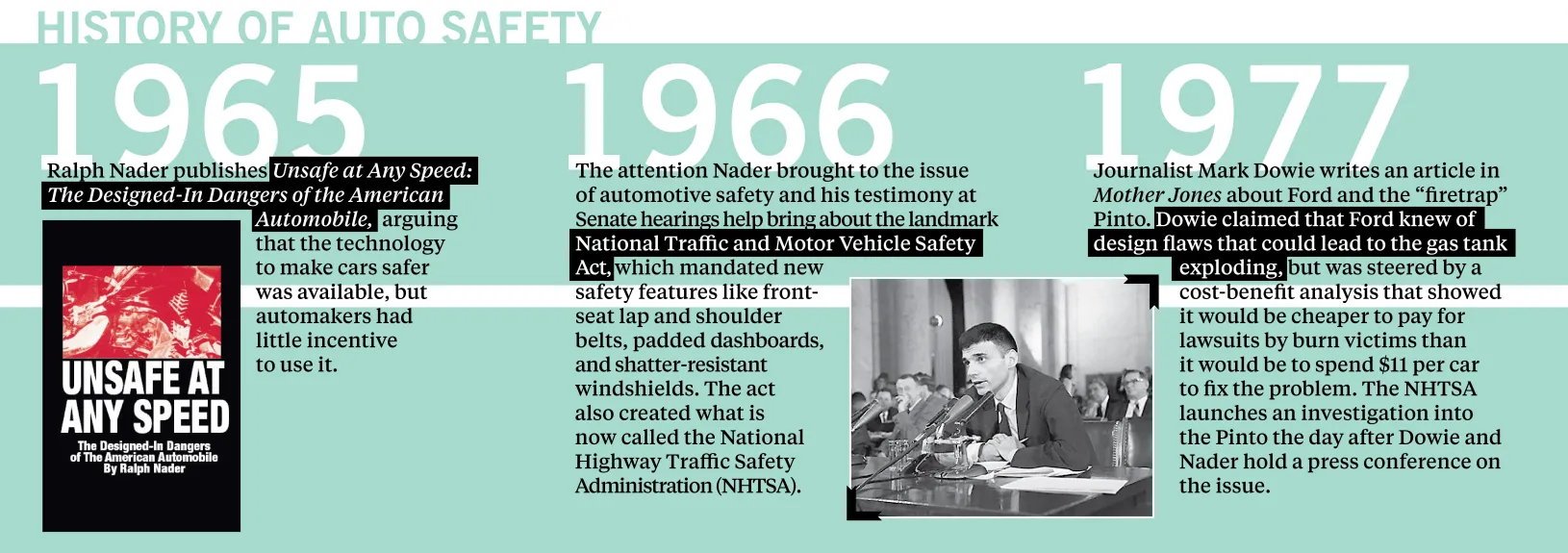

Are there any lessons to be learned from the history of auto safety that could help guide us?

Sometimes eliminating people’s choices is beneficial. When seatbelts were not mandatory equipment, carmakers did not supply them, and when wearing them was not mandatory, people did not wear them. In cost-benefit terms, seatbelts are incredibly cost-effective at saving lives, as is stability control. There are real benefits to having things like that mandated so that people don’t have the choice not to buy them.

The liability system can also induce companies to include automated safety features. But that actually raises an interesting issue, which is that in the liability system, sins of commission are punished more severely than sins of omission. If you put in airbags and the airbag hurts someone, that’s a huge liability issue. Failing to put in the airbag and someone dies? Not as big of an issue. Similarly, suppose that with a self-driving car, a company installs safety features that are automated. They save a lot of lives, but some of the time they result in some deaths. That safety feature is going to get hit in the liability system, I would think.

What sort of regulatory thickets are driverless cars headed into?

When people talk about self-driving cars, a lot of the attention falls on the Google car driving itself completely. But this really is just a progression of automation, bit by bit by bit. Stability control and anti-lock brakes are self-driving–type features, and we’re just getting more and more of them. Google gets a lot of attention in Silicon Valley, but the traditional automakers are putting this into practice.

So you could imagine different platforms and standards around all this. For example, should this be a series of incremental moves or should it be a big jump all the way to a Google-style self-driving car? Setting up different regulatory regimes would favor one of those approaches over the other. I’m not sure whether it’s the right policy, but incremental moves could be a good one. They would also be really good from the perspective of the auto manufacturers, and less good from the perspective of Google. And it could be to a company’s advantage to influence the direction the standards take in a way that favors its technology. This is something that companies moving into this area have to think about strategically, in addition to thinking about the ethical stuff.

What other big ethical questions do you see coming down the road?

At some point, do individuals get banned from having the right to drive? It sounds really far-fetched now. Being able to hit the road and drive freely is a very American thing to do. It feels weird to take away something that feels central to a lot of people’s identity.

But there are precedents for it. The one that Neil Malhotra, one of my coauthors on this case, pointed out is building houses. A hundred and fifty years ago, this was something we all did for ourselves with no government oversight. That’s a very immediate thing: it’s your dwelling, your castle. But if you try to build a house in most of the United States nowadays, there are all sorts of rules for how you have to do the wiring, how wide this has to be, how thick that has to be. Every little detail is very, very tightly regulated. Basically, you can’t do it yourself unless you follow all those rules. We’ve taken that out of individuals’ hands because we saw beneficial consequences in doing so. That may well happen for cars.

Graphics sources: newyorkologist.org; oldcarbrocheres.com; National Museum of American History; Academy of Achievement; iStock/hxdbzxy; Reuters/Stephen Lam.
